Microbenchmarking in Java with JMH: Digging Deeper


This is the fifth and last post in a series about microbenchmarking on the JVM with the Java Microbenchmark Harness (JMH).

part 1: Microbenchmarking in Java with JMH: An Introduction

part 2: Microbenchmarks and their environment

part 3: Common Flaws of Handwritten Benchmarks

In the previous post, I introduced JMH with a Hello World benchmark. Now, let’s dig a bit deeper to find out more about the capabilities of JMH.

A Date Format Benchmark

In this blog post, we’ll implement a microbenchmark that compares the multithreaded performance of different date formatting approaches in Java. This microbenchmark lets us exercise more features of JMH than a Hello World example does. There are three contenders:

- JDK SimpleDateFormat wrapped in a synchronized block: As SimpleDateFormat is not thread-safe, we have to guard access to it using a synchronized block.

- Thread-confined JDK SimpleDateFormat: One alternative to a global lock is to use one instance per thread. We’d expect this alternative to scale much better than the first alternative, as there is no contention.

- FastDateFormat from Apache Commons Lang: This class is a drop-in replacement for SimpleDateFormat (see also its Javadoc).
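The first two contenders can be sketched with the JDK alone. This is only an illustration of the two locking strategies, not the benchmark code itself: the class name DateFormatStrategies and its method names are made up for this example, and FastDateFormat is left out because it needs Apache Commons Lang on the classpath.

```java
import java.text.DateFormat;
import java.util.Date;

// Hypothetical illustration class; it is not part of the benchmark project.
final class DateFormatStrategies {

    // Strategy 1: a single shared formatter. It is not thread-safe,
    // so every access is guarded by a lock on the formatter itself.
    private static final DateFormat SHARED =
            DateFormat.getDateInstance(DateFormat.MEDIUM);

    static String formatSynchronized(Date date) {
        synchronized (SHARED) {
            return SHARED.format(date);
        }
    }

    // Strategy 2: one formatter per thread. No instance is ever shared,
    // so no lock is needed and threads do not contend with each other.
    private static final ThreadLocal<DateFormat> PER_THREAD =
            ThreadLocal.withInitial(() -> DateFormat.getDateInstance(DateFormat.MEDIUM));

    static String formatThreadConfined(Date date) {
        return PER_THREAD.get().format(date);
    }

    public static void main(String[] args) {
        Date now = new Date();
        // Both strategies produce the same text; they differ only in how they scale.
        System.out.println(formatSynchronized(now));
        System.out.println(formatThreadConfined(now));
    }
}
```

Both methods return the same string for the same date. The interesting difference only shows up under load from several threads, which is what the benchmark is meant to measure.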

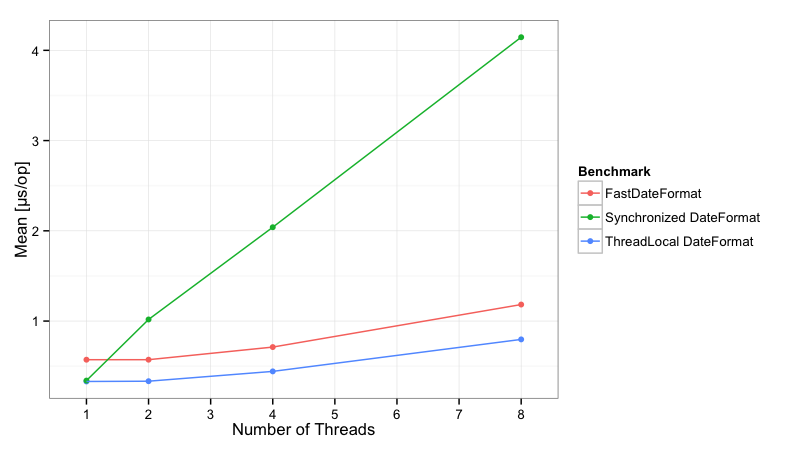

To measure how these three implementations behave when formatting a date in a multithreaded environment, they will be tested with one, two, four and eight benchmark threads. The key metric that should be reported is the time that is needed per invocation of the format method.

Phew, that’s quite a bit to chew on. So let’s tackle the challenge step by step.

Choosing the Metric

Let’s start with the metric that we want to determine. JMH defines the output metric in the enum Mode. As the Javadoc of Mode is already quite detailed, I won’t duplicate the information here. After we’ve looked at the options, we choose Mode.AverageTime as benchmark mode. We can specify the benchmark mode on the benchmark class using @BenchmarkMode(Mode.AverageTime). Additionally, we want the output time unit to be µs.

import org.openjdk.jmh.annotations.*;

import java.util.concurrent.TimeUnit;

@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.MICROSECONDS)
public class DateFormatMicroBenchmark {
    // more code to come...
}

When the microbenchmark is run, results will be reported as µs/op, i.e. how many µs one invocation of the benchmark method took. Let’s move on.
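To make the µs/op unit concrete, here is a small worked example of the arithmetic behind an average time figure. It is a deliberately simplified sketch: the class AverageTimeExample and its method are hypothetical, and JMH's real measurement loop is considerably more involved than a single division.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical helper that only illustrates the unit conversion.
final class AverageTimeExample {

    // Average time per operation in microseconds, given the total elapsed
    // time in nanoseconds and the number of operations performed.
    static double averageMicrosPerOp(long elapsedNanos, long operations) {
        if (operations <= 0) {
            throw new IllegalArgumentException("operations must be positive");
        }
        double nanosPerMicro = TimeUnit.MICROSECONDS.toNanos(1); // 1000 ns per µs
        return (elapsedNanos / nanosPerMicro) / operations;
    }

    public static void main(String[] args) {
        // 1,000 invocations that took 2 ms in total average out to 2 µs each.
        System.out.println(averageMicrosPerOp(2_000_000L, 1_000L) + " us/op"); // prints "2.0 us/op"
    }
}
```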

Defining Microbenchmark Candidates

Next, we need to define the three microbenchmark candidates. We need to keep the three implementations around during a benchmark run. That’s what @State is for in JMH. We also define the scope here; in our case either Scope.Benchmark, i.e. one instance for the whole benchmark, or Scope.Thread, i.e. one instance per benchmark thread. The benchmark class now looks as follows:

import org.apache.commons.lang3.time.FastDateFormat;
import org.openjdk.jmh.annotations.*;

import java.text.DateFormat;
import java.text.Format;
import java.util.Date;
import java.util.concurrent.TimeUnit;

@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.MICROSECONDS)
public class DateFormatMicroBenchmark {
    // This is the date that will be formatted in the benchmark methods
    @State(Scope.Benchmark)
    public static class DateToFormat {
        final Date date = new Date();
    }

    // These are the three benchmark candidates

    @State(Scope.Thread)
    public static class JdkDateFormatHolder {
        final Format format = DateFormat.getDateInstance(DateFormat.MEDIUM);

        public String format(Date d) {
            return format.format(d);
        }
    }

    @State(Scope.Benchmark)
    public static class SyncJdkDateFormatHolder {
        final Format format = DateFormat.getDateInstance(DateFormat.MEDIUM);

        public synchronized String format(Date d) {
            return format.format(d);
        }
    }

    @State(Scope.Benchmark)
    public static class CommonsDateFormatHolder {
        final Format format = FastDateFormat.getDateInstance(FastDateFormat.MEDIUM);

        public String format(Date d) {
            return format.format(d);
        }
    }
}

We defined holder classes for each Format implementation. That’s needed, as we need a place to put the @State annotation. Later on, we can have JMH inject instances of these classes to benchmark methods. Additionally, JMH will ensure that instances have a proper scope. Note that SyncJdkDateFormatHolder achieves thread-safety by defining #format() as synchronized. Now we’re almost there; only the actual benchmark code is missing.
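The difference between the two scopes can be pictured with a plain-Java analogy that counts how many state objects come into existence. This is only a sketch of the idea and says nothing about how JMH manages state internally; the class ScopeAnalogy and the method countInstances are invented for this example.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Hypothetical analogy: "benchmark scope" means one shared object,
// "thread scope" means one object per worker thread.
final class ScopeAnalogy {

    // Starts the given number of threads, lets each obtain its state object,
    // and returns how many state objects were created in total.
    static int countInstances(int threads, boolean perThread) throws InterruptedException {
        AtomicInteger created = new AtomicInteger();
        Supplier<Object> factory = () -> {
            created.incrementAndGet();
            return new Object();
        };

        // Shared state is created once up front; thread-confined state is
        // created lazily the first time each thread asks for it.
        Object shared = perThread ? null : factory.get();
        ThreadLocal<Object> confined = ThreadLocal.withInitial(factory);

        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                Object state = perThread ? confined.get() : shared;
                if (state == null) {
                    throw new IllegalStateException("no state available");
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return created.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countInstances(4, false)); // prints 1: all threads share one instance
        System.out.println(countInstances(4, true));  // prints 4: one instance per thread
    }
}
```

With a shared instance, all threads touch the same object, which is why SyncJdkDateFormatHolder needs a lock. With one instance per thread, as in JdkDateFormatHolder, no synchronization is required.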

Multithreaded Benchmarking

The actual benchmark code is dead-simple. Here is one example:

@Benchmark
public String measureJdkFormat_1(JdkDateFormatHolder df, DateToFormat date) {
    return df.format(date.date);
}

Two things are noteworthy: First, JMH figures out that we need an instance of JdkDateFormatHolder and DateToFormat and injects a properly scoped instance. Second, the method needs to return the result in order to avoid dead-code elimination.

As we did not specify anything, the method will run single-threaded. So let’s add the last missing piece:

@Benchmark
@Threads(2)
public String measureJdkFormat_2(JdkDateFormatHolder df, DateToFormat date) {
    return df.format(date.date);
}

With @Threads we can specify the number of benchmark threads. The actual benchmark code contains methods for each microbenchmark candidate for one, two, four and eight threads. It’s not particularly interesting to copy the whole benchmark code here, so just have a look at GitHub.

Running the Benchmark

This benchmark is included in benchmarking-experiments on GitHub. Just follow the installation instructions, and then issue java -jar build/libs/benchmarking-experiments-0.1.0-all.jar "name.mitterdorfer.benchmark.jmh.DateFormat.*".

Results

I’ve run the benchmark on my machine with an Intel Core i7-2635QM with 4 physical cores and Hyperthreading enabled. The results can be found below:

Unsurprisingly, the synchronized version of SimpleDateFormat does not scale very well, whereas the thread-confined version and FastDateFormat are much better.

There’s (Much) More

DateFormatMicroBenchmark is a more realistic use case of a microbenchmark than what we have seen before in this article series. As you have seen in this example, JMH has a lot to offer: Support for different scopes for state, multithreaded benchmarking and customization of reported metrics.

Apart from these features, JMH provides a lot more, such as support for asymmetric microbenchmarks (think readers and writers) and control over the behavior of the benchmark (How many VM forks are created? Which output formats should be used for reporting? How many warm-up iterations should be run?). It also supports arcane features such as the possibility to control certain aspects of compiler behavior with the @CompilerControl annotation, a Control class that allows you to get information about state transitions in microbenchmarks, support for profilers and many more. Just have a look at the examples yourself, or look for usages of JMH in the wild, such as JCTools microbenchmarks from Nitsan Wakart, the benchmark suite of the Reactor project or Chris Vest’s XorShift microbenchmark.

Alternatives

There are also some alternatives to JMH, but for me none of them is currently as compelling as JMH:

- Handwritten benchmarks: In this series of posts, I’ve demonstrated multiple times that without very intimate knowledge of the JVM’s inner workings, we almost certainly get it wrong. Cliff Click, who architected the HotSpot server compiler, put it this way:

Without exception every microbenchmark I’ve seen has had serious flaws […] Except those I’ve had a hand in correcting.

- Caliper: An open-source Java microbenchmarking framework by Google. This seems to be the only “serious” alternative to JMH but still has its weaknesses.

- JUnitPerf: JUnitPerf is a JUnit extension for performance tests. It decorates unit tests with timers. Although you might consider it for writing microbenchmarks, it is not as suitable as other solutions. It does not provide support for warm-up, multithreaded testing, controlling the impact of JIT, etc. However, if all you need is a coarse-grained measurement of the runtime of an integration test, then JUnitPerf might be for you. JUnitPerf can be one of your lines of defense against system-level performance regressions, as you can easily integrate these tests in your automated build.

- Performance Testing classes by Heinz Kabutz: Heinz Kabutz wrote a set of performance testing classes in issue 124 of The Java Specialists’ Newsletter. I would not consider it a fully-fledged framework but a set of utility classes.

Final Thoughts

Although writing correct microbenchmarks on the JVM is really hard, JMH helps to avoid many issues. It is written by experts on the OpenJDK team and solves issues you might not even know you had in a handwritten benchmark, e.g. false sharing. JMH makes it much easier to write correct microbenchmarks without requiring an intimate knowledge of the JVM at the level of an engineer on the HotSpot team. JMH’s benefits are so compelling that you should never consider rolling your own handwritten microbenchmarks.

Questions or comments?

Just ping me on Twitter